It has never been easier or safer to scale up a deployment. Hardware virtualisation in the cloud, together with the tools for rapidly deploying software on new virtual machines, has been a big step forward.

Let’s consider a telco example from our experience:

Take a complex application that provisions subscription data to several systems, on either the operator’s or the partners’ side. The application sits between the operator and its partners and is responsible for publishing every change to subscriber data that occurs between them. Because partners are handled differently, a change may affect all subscribers or only a subset of them. Likewise, a given subscriber data change may be relevant to all partners or only to some of them.
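The routing decision described above can be sketched as a filter over partners. This is a minimal illustration, not the application’s actual design: it assumes each partner is described by a predicate saying which subscribers it handles and a set of data fields it consumes. The classes `SubscriberChange` and `Partner`, the function `route`, and the field and segment names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SubscriberChange:
    """One change to a subscriber's data (hypothetical model)."""
    subscriber_id: str
    segment: str                     # illustrative attribute, e.g. "prepaid"
    changed_fields: frozenset[str]   # which data fields changed


@dataclass(frozen=True)
class Partner:
    """A partner system and the slice of data it cares about (hypothetical)."""
    name: str
    handles: Callable[[SubscriberChange], bool]  # which subscribers it handles
    fields_of_interest: frozenset[str]           # which fields it consumes


def route(change: SubscriberChange, partners: list[Partner]) -> list[str]:
    """Names of the partners to notify: the partner must handle this
    subscriber AND care about at least one of the changed fields."""
    return [
        p.name
        for p in partners
        if p.handles(change) and change.changed_fields & p.fields_of_interest
    ]


partners = [
    Partner("partner_a", lambda c: True, frozenset({"msisdn", "tariff"})),
    Partner("partner_b", lambda c: c.segment == "postpaid", frozenset({"tariff"})),
    Partner("partner_c", lambda c: True, frozenset({"address"})),
]
tariff_change = SubscriberChange("sub-1", "prepaid", frozenset({"tariff"}))
print(route(tariff_change, partners))  # ['partner_a']
```

Both dimensions from the text show up here: `handles` selects a subset of subscribers per partner, while `fields_of_interest` selects a subset of partners per kind of change.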

Workflow changes often have to be implemented in code, which leads to frequent software updates. With some 30 million subscribers and 20 partners, the application may run on more than 40 instances, and updating them manually is out of the question nowadays. We all know it; we’ve been there…

Moreover, once 40 instances are deployed, we will likely need even more to scale up the solution. It then becomes critical to monitor resource consumption on every virtual machine and, when capacity must grow, to add new virtual machines as smoothly as we deploy a new software release.
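As a rough illustration of turning monitored resource consumption into a scale-out decision, the sketch below estimates how many virtual machines to add. It assumes utilisation is reported as a fraction per VM, that load spreads evenly across VMs, and a hypothetical target utilisation of 70%; the names `VmUsage` and `vms_to_add` are invented for this example and do not come from Nokia Telco Cloud.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class VmUsage:
    """Resource utilisation of one VM, as fractions in [0, 1] (hypothetical)."""
    name: str
    cpu: float
    memory: float


def vms_to_add(usages: list[VmUsage], target: float = 0.7) -> int:
    """Extra VMs needed so the average utilisation of the busier resource
    (CPU or memory) drops to `target`, assuming load spreads evenly."""
    if not usages:
        return 0
    n = len(usages)
    avg_cpu = sum(u.cpu for u in usages) / n
    avg_mem = sum(u.memory for u in usages) / n
    busiest = max(avg_cpu, avg_mem)
    # Round before ceil so float noise (e.g. 40.000000000001) doesn't add a VM.
    needed = math.ceil(round(n * busiest / target, 9))
    return max(0, needed - n)


fleet = [VmUsage(f"vm-{i:02d}", cpu=0.85, memory=0.5) for i in range(40)]
print(vms_to_add(fleet))  # 9
```

Sizing on the busier of the two resources keeps the estimate conservative. A production autoscaler would also smooth the measurements over time so that a short spike does not trigger a scale-out.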

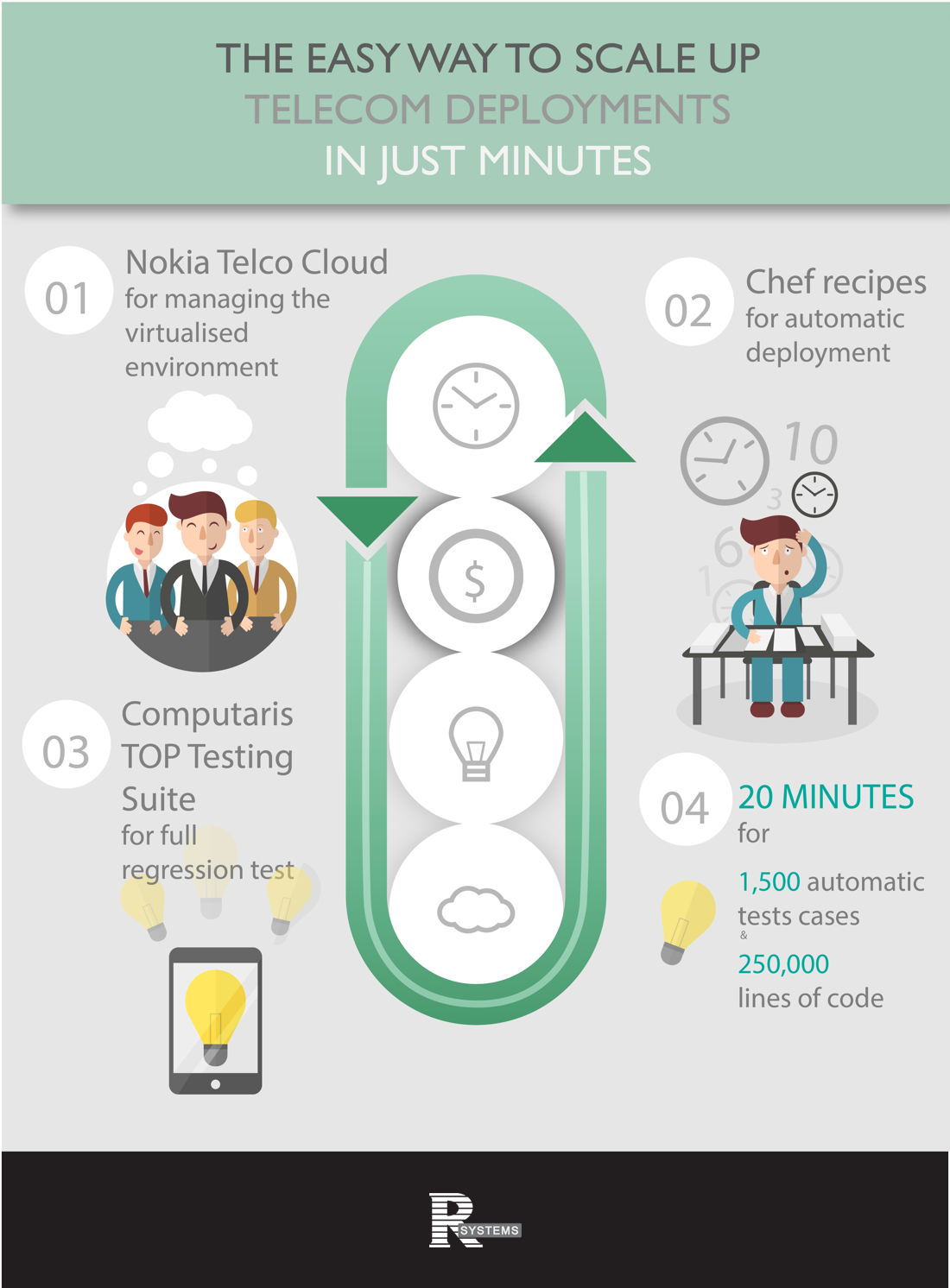

It’s all easy with Nokia Telco Cloud helping us manage the virtualised environment

Nokia has been a forerunner in developing the telco cloud. Together with our customers, we have developed and tested virtualised network elements and cloud application management, and demonstrated that cloud technology is ready for commercial deployment. Nokia’s core software and telco cloud management systems are hardware-independent and cloud-platform agnostic, so operators can take full advantage of the cloud paradigm. Nokia is helping operators on their journey to the telco cloud with services covering the full cloud life cycle.

But what about the software being deployed? With tens of programmers and a complex deployment in which many software components run on different subsets of the machines, disaster can become a daily occurrence.

Unless … we follow a successful recipe to test and deploy automatically

For this, we used two key technologies: Chef for automated deployment and the R Systems TOP Testing Suite for full regression testing.

With Chef, you can automate how you build, deploy, and manage your infrastructure. Your infrastructure becomes as versionable, testable, and repeatable as application code. The Chef server stores your recipes as well as other configuration data. The Chef client is installed on each server, virtual machine, container, or networking device you manage; we’ll call these nodes. The client periodically polls the Chef server for the latest policy and the state of your network. If anything on the node is out of date, the client brings it up to date.
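The poll-and-converge idea behind the Chef client can be illustrated with a small desired-state loop. This is a conceptual sketch in Python, not Chef’s API (Chef recipes are written in Chef’s own Ruby-based DSL). It assumes a node’s state is simply a map from component name to installed version, and `converge`, `run_client` and the component names are hypothetical.

```python
import time
from typing import Callable


def converge(desired: dict[str, str], node: dict[str, str]) -> list[str]:
    """Bring `node` (installed component -> version) in line with `desired`.
    Returns the actions taken; a node already up to date yields no actions."""
    actions = []
    for component, version in sorted(desired.items()):
        current = node.get(component)
        if current != version:
            verb = "install" if current is None else "update"
            node[component] = version
            actions.append(f"{verb} {component} -> {version}")
    return actions


def run_client(fetch_policy: Callable[[], dict[str, str]], node: dict[str, str],
               runs: int, interval_s: float) -> list[list[str]]:
    """Poll for the latest policy `runs` times, converging the node each time."""
    history = []
    for i in range(runs):
        history.append(converge(fetch_policy(), node))
        if i < runs - 1:
            time.sleep(interval_s)  # wait before the next poll
    return history


node = {"publisher": "1.4"}
policy = {"publisher": "1.5", "simulator": "2.0"}
print(run_client(lambda: policy, node, runs=2, interval_s=0))
# [['update publisher -> 1.5', 'install simulator -> 2.0'], []]
```

The empty second run is the property that makes periodic polling safe: converging an up-to-date node changes nothing.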

We wrote Chef recipes for deployment on our own test system, plus a couple of other recipes for deployment on the production and staging systems at the customer site. Adding new virtual machines, deploying the right software component on the right virtual machine, following the restart procedure: all of these are easy when you have a recipe.
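Putting “the right software component on the right virtual machine” amounts to resolving each node’s roles into a list of components. The sketch below loosely mirrors the idea of assigning roles to nodes, but it is plain Python with hypothetical role and component names, not an actual Chef configuration.

```python
def deployment_plan(node_roles: dict[str, list[str]],
                    role_components: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each node to the ordered, de-duplicated list of components its
    roles require."""
    plan: dict[str, list[str]] = {}
    for node, roles in node_roles.items():
        components: list[str] = []
        for role in roles:
            for component in role_components[role]:
                if component not in components:  # keep first occurrence only
                    components.append(component)
        plan[node] = components
    return plan


# Hypothetical roles and component names, for illustration only.
ROLE_COMPONENTS = {
    "provisioning": ["provisioning-core", "partner-adapter"],
    "monitoring": ["metrics-agent"],
    "gateway": ["partner-adapter", "api-gateway"],
}
nodes = {
    "vm-01": ["provisioning", "monitoring"],
    "vm-02": ["gateway", "monitoring"],
}
nodes["vm-41"] = ["provisioning"]  # scaling out = one more entry
print(deployment_plan(nodes, ROLE_COMPONENTS))
```

With this shape, adding a virtual machine is a one-line change to `nodes`, and the plan for every existing node stays the same.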

But how do we make sure that what we deploy is quality proof?

Quality assurance is mandatory for any company striving to find the right balance between time to market and development costs. R Systems created TOP, a suite of testing tools built around JMeter, which contains the core logic for smoothly defining, managing and running a broad range of test plans. TOP Testing Suite is a cost-effective and straightforward tool for telecom software engineers, and it can be applied at every stage of product development and deployment.

This is especially important for a deployment across tens of instances affecting millions of subscribers, where even a simple mistake can have a serious impact, if not cause a complete disaster. Performed manually, with one quality assurance tester running at most 20 tests on a good day, regression testing of the critical tests alone would normally take a week. On top of that comes the risk of human error after delivery, during the production deployment on 40 servers.

We’ve done this before and managed to avoid disasters, but still:

Is there a way to automate changes and reduce both deployment time and the risk of error?

For our subscriber data provisioning (or publishing) application, we have written more than 1,500 automated test cases, and we keep adding to that number with every software change. Running so many tests against a complex architecture, while making sure that test cases do not interfere with one another, requires a well-thought-out architecture dedicated to testing. We therefore built a test setup with 20 instances of the application under test, plus the corresponding simulators, so that the full regression suite runs in just minutes.
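The article does not say how tests are spread over the 20 instances. One plausible approach is sketched here as an assumption: group tests that share a resource (such as a subscriber ID or a partner simulator) so they never run in parallel, then hand the groups to instances with longest-processing-time-first scheduling. The functions `group_conflicting` and `assign_to_instances`, and the test and resource names, are hypothetical.

```python
import heapq
from collections import defaultdict


def group_conflicting(tests: dict[str, set[str]]) -> list[list[str]]:
    """Group tests that (transitively) share a resource, e.g. a subscriber ID
    or a partner simulator. Tests in one group must not run in parallel."""
    parent = {t: t for t in tests}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    first_user: dict[str, str] = {}  # resource -> first test that uses it
    for test, resources in tests.items():
        for r in resources:
            if r in first_user:
                parent[find(test)] = find(first_user[r])
            else:
                first_user[r] = test
    groups: dict[str, list[str]] = defaultdict(list)
    for t in tests:
        groups[find(t)].append(t)
    return list(groups.values())


def assign_to_instances(groups: list[list[str]], durations: dict[str, float],
                        instances: int = 20) -> dict[int, list[str]]:
    """Longest-processing-time-first: each group, biggest first, goes to the
    instance with the least total work so far."""
    heap = [(0.0, i) for i in range(instances)]
    heapq.heapify(heap)
    plan: dict[int, list[str]] = {i: [] for i in range(instances)}
    for group in sorted(groups, key=lambda g: sum(durations[t] for t in g),
                        reverse=True):
        load, i = heapq.heappop(heap)
        plan[i].extend(group)
        heapq.heappush(heap, (load + sum(durations[t] for t in group), i))
    return plan


tests = {"t1": {"sub-1"}, "t2": {"sub-1", "sub-2"}, "t3": {"sub-3"}, "t4": {"sub-2"}}
groups = group_conflicting(tests)
plan = assign_to_instances(groups, {t: 1.0 for t in tests}, instances=2)
print(plan)  # {0: ['t1', 't2', 't4'], 1: ['t3']}
```

Tests within one group run one after another on the same instance, so they cannot trample each other’s data, while independent groups run in parallel across instances.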

The whole chain, from the moment a programmer commits a source code change through compiling, deploying, automated testing and reporting, takes only 20 minutes. And the solution comprises about 250,000 lines of code (not counting third-party libraries). Quite impressive, isn’t it?
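The commit-to-report chain can be modelled as an ordered list of stages with a time budget. This is a minimal sketch, not the project’s actual CI setup: the stage functions are hypothetical placeholders for the real compile, deploy, test and report steps, and the 20-minute budget is taken from the figure above.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class StageResult:
    name: str
    ok: bool
    seconds: float


def run_pipeline(stages: list[tuple[str, Callable[[], bool]]],
                 budget_s: float = 20 * 60) -> tuple[bool, list[StageResult]]:
    """Run stages in order, stopping at the first failure. The run passes only
    if every stage succeeds and the total time stays within `budget_s`."""
    results: list[StageResult] = []
    start = time.monotonic()
    for name, stage in stages:
        t0 = time.monotonic()
        ok = stage()
        results.append(StageResult(name, ok, time.monotonic() - t0))
        if not ok:
            return False, results  # fail fast: later stages are skipped
    return time.monotonic() - start <= budget_s, results


# Hypothetical placeholder stages standing in for the real steps.
stages = [
    ("compile", lambda: True),
    ("deploy", lambda: True),
    ("test", lambda: True),
    ("report", lambda: True),
]
passed, results = run_pipeline(stages)
print(passed, [r.name for r in results])  # True ['compile', 'deploy', 'test', 'report']
```

Failing fast means a broken build never reaches the test instances, and the per-stage timings show where the 20-minute budget goes.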